Translate Subtitles with ChatGPT + Python

Video subtitle translation opens the door to a global audience. Whether you're a content creator, an organization, or simply someone looking to share videos with friends and family worldwide, the need for accurate and efficient subtitle translation is undeniable.

With generative AI now widely available, translating subtitles has become more cost-effective than ever. In this post, I'd like to share how you can use ChatGPT and Python scripts to translate subtitles into various languages.

Idea

Why would we use ChatGPT and Python? Here's why.

- High-Quality Translations in Multiple Languages

ChatGPT offers surprisingly good translations across many languages. They are far from perfect, but they outperform YouTube's auto-generated subtitles by quite a margin: with ChatGPT's translations, you can actually understand most of the ideas.

- Preserving Original File Format

ChatGPT does a great job of preserving the original file structure, including timestamps, which set it apart from other large language models (LLMs) I tested. As a result, the translated subtitles still sync with your video.

- Content-Length Limitations

ChatGPT has one limitation: it can't handle large content in a single request. That's a problem for subtitle translation, since most subtitle files are too large for one request. That's where Python helps: instead of splitting subtitles into smaller segments by hand, you can let a Python script automate the process and save time and effort.

When you instruct ChatGPT to perform a task, the way you phrase your prompt directly affects the quality of the result. My approach was inspired by the course ChatGPT Prompt Engineering for Developers, which I highly recommend if you're interested.

Process

1. Obtain the .srt file

First, we need a subtitle file in the original language. An accurate source file is crucial for a high-quality translation, so use manually transcribed subtitles whenever possible.

What is an .srt file? SRT is a widely supported subtitle file format. Each entry consists of a sequence number, a line with the start and end timestamps (hours:minutes:seconds,milliseconds), one or more lines of subtitle text, and a blank line that separates it from the next entry. Here's an example from Star Wars: Episode II – Attack of the Clones:

```
1
00:02:16,612 --> 00:02:19,376
Senator, we're making
our final approach into Coruscant.

2
00:02:19,482 --> 00:02:21,609
Very good, Lieutenant.

3
00:03:13,336 --> 00:03:15,167
We made it.

4
00:03:18,608 --> 00:03:20,371
I guess I was wrong.

5
00:03:20,476 --> 00:03:22,671
There was no danger at all.
```
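To make the structure concrete, here is a minimal parsing sketch, assuming well-formed UTF-8 input whose entries are separated by blank lines. `Cue` and `parse_srt` are hypothetical helpers for illustration only; the script later in this post does not need them.

```python
import re
from dataclasses import dataclass

# Matches a timing line such as "00:02:16,612 --> 00:02:19,376".
TIMING = re.compile(r"(\d{2}:\d{2}:\d{2},\d{3}) --> (\d{2}:\d{2}:\d{2},\d{3})")


@dataclass
class Cue:
    number: int
    start: str
    end: str
    text: str  # may span several lines, joined with "\n"


def parse_srt(content: str) -> list[Cue]:
    """Split SRT content on blank lines and parse each entry into a Cue."""
    cues = []
    # Normalize Windows line endings and drop a UTF-8 byte order mark, if any.
    content = content.replace("\r\n", "\n").lstrip("\ufeff").strip()
    for entry in re.split(r"\n\s*\n", content):
        lines = entry.splitlines()
        if len(lines) < 2:
            continue  # too short to hold a number and a timing line
        match = TIMING.match(lines[1])
        if not match:
            continue  # not a well-formed entry; skip it
        cues.append(Cue(int(lines[0]), match.group(1), match.group(2), "\n".join(lines[2:])))
    return cues
```

Note that the first entry above has two text lines; the parser keeps them together in one `text` value.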

2. Craft the ChatGPT Prompt

Once you have the .srt file, it's time to use ChatGPT for translation. As discussed earlier, an effective prompt is essential for good results. After several iterations, I found that the following prompt works well for translating subtitles from English to Korean.

````
Objective:
- Translate subtitles from English to Korean accurately while maintaining the original meaning and tone.
- Use natural-sounding phrases and idioms that accurately convey the meaning of the original text.

Roles:
- Linguist: Responsible for translating the subtitles.

Strategy:
- Translate subtitles accurately while maintaining the original meaning and tone.
- Use user feedback and engagement metrics to assess the effectiveness of the translations generated.

Instructions:
- The given .srt text delimited by triple backticks is the subtitle for the video.
- User Inputs any language of subtitles they want to translate one at a time.
- Given text is in English.
- Generate natural-sounding translations that accurately convey the meaning of the original text.
- Check the accuracy and naturalness of the translations before submitting them to the user.

```
1
00:02:16,612 --> 00:02:19,376
Senator, we're making
our final approach into Coruscant.

2
00:02:19,482 --> 00:02:21,609
Very good, Lieutenant.

3
00:03:13,336 --> 00:03:15,167
We made it.

4
00:03:18,608 --> 00:03:20,371
I guess I was wrong.

5
00:03:20,476 --> 00:03:22,671
There was no danger at all.
```
````

Here is ChatGPT's output, and the results are good enough. All sequence numbers were retained, the timestamps are exactly the same, and each translation sits in the same position as the original text.

```
1
00:02:16,612 --> 00:02:19,376
의원님, 우리는 코루스칸트로 최종 접근 중입니다.

2
00:02:19,482 --> 00:02:21,609
아주 좋습니다, 중위.

3
00:03:13,336 --> 00:03:15,167
우리가 성공했습니다.

4
00:03:18,608 --> 00:03:20,371
아마 제가 틀렸던 것 같습니다.

5
00:03:20,476 --> 00:03:22,671
어떤 위험도 없었던 것 같습니다.
```
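Because the timing lines must survive translation untouched, a quick automated check is useful before you trust an output file. The sketch below uses hypothetical helpers, `timestamps` and `same_timing`, and assumes standard `HH:MM:SS,mmm --> HH:MM:SS,mmm` timing lines; it simply compares them in order.

```python
import re

# A timing line looks like "00:02:16,612 --> 00:02:19,376" and starts a line.
TIMING = re.compile(r"^\d{2}:\d{2}:\d{2},\d{3} --> \d{2}:\d{2}:\d{2},\d{3}", re.MULTILINE)


def timestamps(srt_text: str) -> list[str]:
    """Return every timing line in the order it appears."""
    return TIMING.findall(srt_text)


def same_timing(original: str, translated: str) -> bool:
    """True if the translation kept every timing line, in the same order."""
    return timestamps(original) == timestamps(translated)
```

Output that drops the timing lines entirely, like a plain numbered list of sentences, fails this check.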

For comparison, here are the results from Google's Bard. The file structure was not preserved: the timestamps are gone, and the entries were turned into a numbered list. Google may have improved this by the time you read this post, but this was the behavior when I tested it.

```
1. 상원 의원님, 코루스칸트에 최종 접근 중입니다.
2. 알겠습니다, 중위님.
3. 무사히 도착했군요.
4. 내가 틀렸나 봅니다.
5. 아무런 위험도 없었네요.
```

3. Use the ChatGPT API

So, are we finished? Not quite. ChatGPT limits how long a prompt can be, so you would have to split the subtitles into smaller chunks, wrap each one in the prompt above, and copy and paste them into the ChatGPT interface one by one. This takes significant effort and is error-prone. The good news is that OpenAI offers a REST API that lets you automate the process.

Note that the REST API is not free. If you don't want to pay anything, you can stop here and use the prompt above to send as many requests through the ChatGPT interface as needed to translate your subtitles.

That said, it isn't expensive either. Translating a 50 KB subtitle file (roughly 30 minutes of video) with GPT-3.5 Turbo costs less than $0.10 per language. For more pricing details, see the appendix at the end of this post.

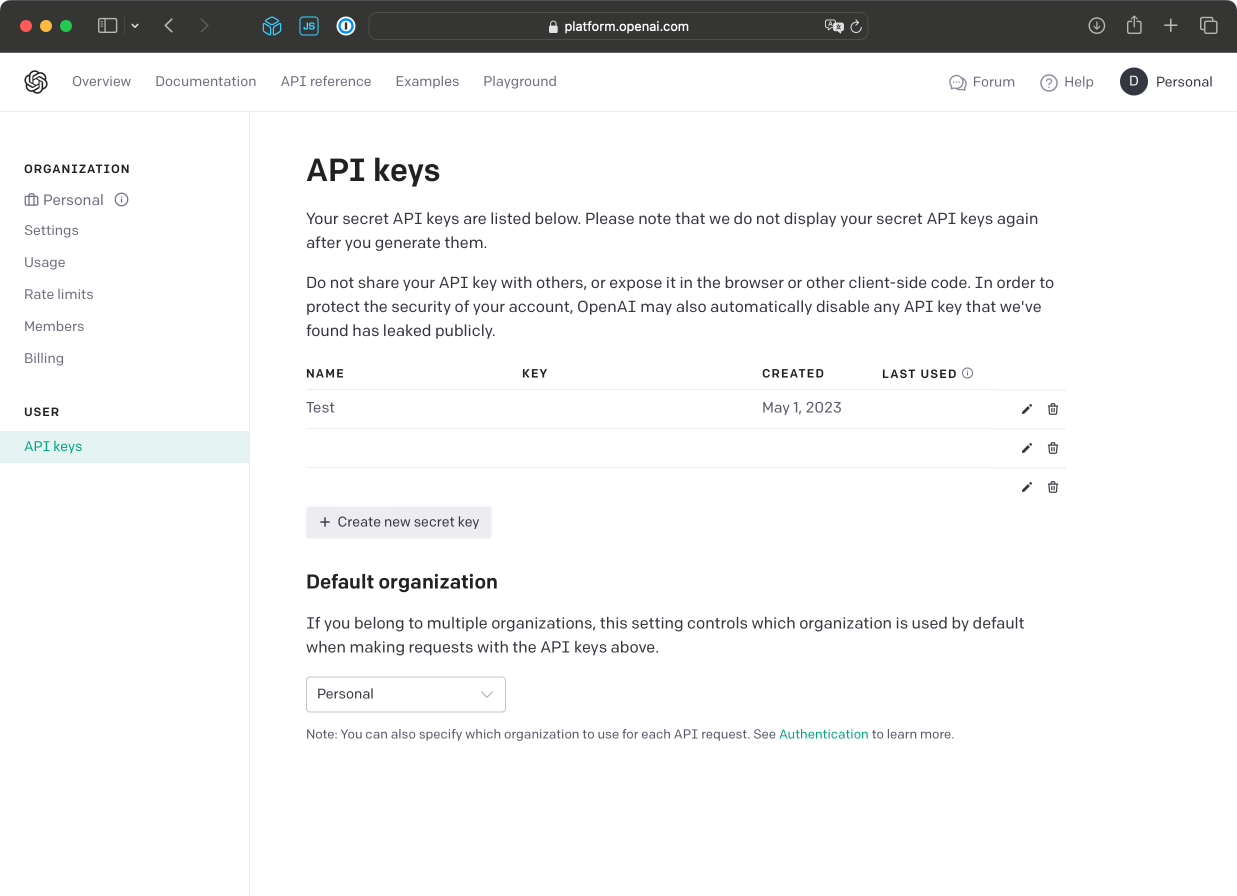

You can create an API key in your OpenAI account under User > API keys.

4. Set Up the Python Environment

If you don’t have Python installed, install it from here.

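Create a new directory and run the following command to install the dependencies. Depending on your configuration, you may need to use python3 and pip3 instead of python and pip. The scripts in this post use the openai.ChatCompletion interface, which was removed in version 1.0 of the openai package, so install a pre-1.0 version. asyncio is part of Python's standard library and does not need to be installed.

```
$ pip install "openai<1.0"
```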

You also need to set your OpenAI API key as an environment variable.

```
$ export OPENAI_API_KEY=... # Use the actual API key
```
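Optionally, the script can stop early with a readable message when the variable is missing, instead of surfacing an authentication error from the API later. A minimal sketch; `require_api_key` is a hypothetical helper, not part of the openai package.

```python
import os
import sys


def require_api_key(var: str = "OPENAI_API_KEY") -> str:
    """Return the API key from the environment, or exit with a clear message."""
    key = os.getenv(var, "").strip()
    if not key:
        # sys.exit with a string prints it to stderr and exits with status 1.
        sys.exit(f"{var} is not set. Run: export {var}=<your key>")
    return key
```

In run.py, you would then write `openai.api_key = require_api_key()`.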

5. Initial Script

Let's start by translating a small sample of subtitles to verify that the API works.

First, create a new Python file, run.py, and import the libraries we will use. We also define a variable for the target language.

```python
import os
import openai
import asyncio

language = "Korean"
```

The line below reads the API key from the OPENAI_API_KEY environment variable.

```python
openai.api_key = os.getenv('OPENAI_API_KEY')
```

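The function below reads the subtitle text from input.srt.

```python
def get_text():
    # Explicit UTF-8 avoids platform-dependent default encodings (e.g. on Windows).
    with open("input.srt", "r", encoding="utf-8") as file:
        text = file.read()
    return text
```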

You can create input.srt for testing purposes as shown below:

```
1
00:02:16,612 --> 00:02:19,376
Senator, we're making
our final approach into Coruscant.

2
00:02:19,482 --> 00:02:21,609
Very good, Lieutenant.

3
00:03:13,336 --> 00:03:15,167
We made it.

4
00:03:18,608 --> 00:03:20,371
I guess I was wrong.

5
00:03:20,476 --> 00:03:22,671
There was no danger at all.
```

The function below reads prompt.txt. Keeping the prompt separate from the code makes it easy to tweak. The template uses the {language} and {text} placeholders, which are replaced with the target language and the subtitle text.

```python
def get_prompt(language, text):
    with open("prompt.txt", "r", encoding="utf-8") as file:
        prompt = file.read()
    # Fill in the placeholders in the template.
    prompt = prompt.replace("{language}", language)
    prompt = prompt.replace("{text}", text)
    return prompt
```

Create prompt.txt as follows:

````
Objective:
- Translate subtitles from English to {language} accurately while maintaining the original meaning and tone.
- Use natural-sounding phrases and idioms that accurately convey the meaning of the original text.

Roles:
- Linguist: Responsible for translating the subtitles.

Strategy:
- Translate subtitles accurately while maintaining the original meaning and tone.
- Use user feedback and engagement metrics to assess the effectiveness of the translations generated.

Instructions:
- The given .srt text delimited by triple backticks is the subtitle for the video.
- User Inputs any language of subtitles they want to translate one at a time.
- Given text is in English.
- Generate natural-sounding translations that accurately convey the meaning of the original text.
- Check the accuracy and naturalness of the translations before submitting them to the user.

```
{text}
```
````

The function below wraps the network request to the ChatGPT API, so you can call it as a simple function that takes a prompt and returns the model's reply.

```python
async def get_completion(prompt, model="gpt-3.5-turbo"):
    messages = [{"role": "user", "content": prompt}]
    # Async chat completion call from the pre-1.0 openai package.
    response = await openai.ChatCompletion.acreate(
        model=model,
        messages=messages,
        temperature=0,  # this is the degree of randomness of the model's output
    )
    return response.choices[0].message["content"]
```
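Requests can occasionally fail, for example when you hit a rate limit. A common remedy, not part of the original script, is to retry with exponential backoff. The sketch below is generic and makes no assumptions about the SDK: `with_retries` is a hypothetical helper that takes a zero-argument function returning a fresh coroutine. By default it retries on any exception; in practice you would narrow `retry_on` to the SDK's rate-limit error (in pre-1.0 versions of the openai package, `openai.error.RateLimitError`).

```python
import asyncio
import random


async def with_retries(make_call, attempts=5, base_delay=1.0, max_jitter=1.0, retry_on=(Exception,)):
    """Await make_call(), retrying failed attempts with exponential backoff.

    make_call must return a *new* coroutine each time, because a coroutine
    object can only be awaited once.
    """
    for attempt in range(attempts):
        try:
            return await make_call()
        except retry_on:
            if attempt == attempts - 1:
                raise  # out of retries: surface the last error
            # Wait base_delay * 1, 2, 4, ... seconds, plus random jitter so
            # concurrent requests don't all retry at the same moment.
            await asyncio.sleep(base_delay * 2 ** attempt + random.uniform(0, max_jitter))
```

For example, `result = await with_retries(lambda: get_completion(prompt))`.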

The code below calls these functions and saves the result to output.srt.

```python
async def main():
    text = get_text()
    prompt = get_prompt(language, text)
    result = await get_completion(prompt)
    # Write UTF-8 explicitly so non-Latin output (e.g. Korean) saves on any OS.
    with open("output.srt", "w", encoding="utf-8") as file:
        file.write(result)

asyncio.run(main())
```

Below is the entire run.py file at this point.

```python
import os
import openai  # requires a pre-1.0 version of the openai package
import asyncio

language = "Korean"

openai.api_key = os.getenv('OPENAI_API_KEY')


def get_text():
    with open("input.srt", "r", encoding="utf-8") as file:
        text = file.read()
    return text


def get_prompt(language, text):
    with open("prompt.txt", "r", encoding="utf-8") as file:
        prompt = file.read()
    prompt = prompt.replace("{language}", language)
    prompt = prompt.replace("{text}", text)
    return prompt


async def get_completion(prompt, model="gpt-3.5-turbo"):
    messages = [{"role": "user", "content": prompt}]
    response = await openai.ChatCompletion.acreate(
        model=model,
        messages=messages,
        temperature=0,  # this is the degree of randomness of the model's output
    )
    return response.choices[0].message["content"]


async def main():
    text = get_text()
    prompt = get_prompt(language, text)
    result = await get_completion(prompt)
    with open("output.srt", "w", encoding="utf-8") as file:
        file.write(result)


asyncio.run(main())
```

Now, let's run the code and see if it does what we expect. If everything goes smoothly, the translated subtitles are written to output.srt.

```
$ python run.py
```

6. Translate Larger Subtitles

Since we can't translate the entire subtitle file in a single prompt, we need to divide it into smaller chunks and process them individually. Here, a block means one subtitle entry (sequence number, timestamps, and text). I found that different chunk sizes give different translation quality, and 50 blocks per prompt has worked well for our use case.

```python
max_blocks = 50
```

We read the file as a list of lines and group the lines into blocks, using the empty lines as separators.

```python
def get_lines():
    with open("input.srt", "r", encoding="utf-8") as file:
        lines = file.readlines()
    return lines

...

async def main():
    blocks = []
    current_number_of_blocks = 0
    text = ""
    lines = get_lines()
    for line in lines:
        text += line
        # A blank line ends one subtitle entry (skip blanks before any content).
        if line.strip() == "" and text.strip():
            current_number_of_blocks += 1
            # Once the chunk holds max_blocks entries, wrap it in the prompt.
            if current_number_of_blocks >= max_blocks:
                blocks += [get_prompt(language, text)]
                current_number_of_blocks = 0
                text = ""
    # Don't forget the last, partially filled chunk.
    if text.strip():
        blocks += [get_prompt(language, text)]

    ...
```
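If you want to test the chunking without calling the API, the same logic can be pulled out into a pure function. This is a sketch of a possible refactoring; `split_into_chunks` is a hypothetical name, and, like the loop above, it treats a blank line as the end of an entry.

```python
def split_into_chunks(lines, max_blocks=50):
    """Group SRT lines into chunks of at most max_blocks subtitle entries."""
    chunks, text, count = [], "", 0
    for line in lines:
        text += line
        # A blank line closes an entry, unless nothing meaningful is buffered yet.
        if line.strip() == "" and text.strip():
            count += 1
            if count >= max_blocks:
                chunks.append(text)
                text, count = "", 0
    if text.strip():
        chunks.append(text)  # leftover entries that did not fill a whole chunk
    return chunks
```

In main(), you could then write `blocks = [get_prompt(language, chunk) for chunk in split_into_chunks(get_lines(), max_blocks)]`.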

Translating a large file one request at a time is slow, so we send the requests concurrently instead. Be careful not to exceed the API rate limits, especially if you decide to use GPT-4.

```python
async def translate(language, number, total, block):
    print(f"Start #{number} of {total}...")
    result = await get_completion(block)
    # Remove triple backticks from the result.
    result = result.replace("```\n", "")
    result = result.replace("```", "")
    # Trim surrounding whitespace, then end with one blank line so chunks stay separated.
    result = result.strip()
    result += "\n\n"
    print(f"Finish #{number} of {total}...")
    return result


async def main():
    ...
    # Translate all chunks concurrently; gather() returns results in the original order.
    tasks = [translate(language, index + 1, len(blocks), block) for index, block in enumerate(blocks)]
    result = await asyncio.gather(*tasks)
    with open("output.srt", "w", encoding="utf-8") as file:
        for chunk in result:
            file.write(chunk)
```
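`asyncio.gather()` as used here starts every request at once, which can trip the rate limits on long files. One way to cap concurrency is an `asyncio.Semaphore`. The sketch below uses a hypothetical helper, `gather_limited`; the limit you pick is an assumption, so check the rate limits on your own account.

```python
import asyncio


async def gather_limited(coros, limit=5):
    """Run coroutines concurrently, but never more than `limit` at a time.

    Results come back in the same order as `coros`, like asyncio.gather().
    """
    semaphore = asyncio.Semaphore(limit)

    async def run(coro):
        async with semaphore:  # wait for a free slot before starting
            return await coro

    return await asyncio.gather(*(run(c) for c in coros))
```

In main(), `result = await gather_limited(tasks, limit=5)` would replace the plain `asyncio.gather(*tasks)` call.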

Below is the entire run.py file at this point.

```python
import os
import openai  # requires a pre-1.0 version of the openai package
import asyncio

max_blocks = 50
language = "Korean"

openai.api_key = os.getenv('OPENAI_API_KEY')


def get_lines():
    with open("input.srt", "r", encoding="utf-8") as file:
        lines = file.readlines()
    return lines


def get_prompt(language, text):
    with open("prompt.txt", "r", encoding="utf-8") as file:
        prompt = file.read()
    prompt = prompt.replace("{language}", language)
    prompt = prompt.replace("{text}", text)
    return prompt


async def get_completion(prompt, model="gpt-3.5-turbo"):
    messages = [{"role": "user", "content": prompt}]
    response = await openai.ChatCompletion.acreate(
        model=model,
        messages=messages,
        temperature=0,  # this is the degree of randomness of the model's output
    )
    return response.choices[0].message["content"]


async def translate(language, number, total, block):
    print(f"Start #{number} of {total}...")
    result = await get_completion(block)
    # Remove triple backticks from the result.
    result = result.replace("```\n", "")
    result = result.replace("```", "")
    # Trim surrounding whitespace, then end with one blank line so chunks stay separated.
    result = result.strip()
    result += "\n\n"
    print(f"Finish #{number} of {total}...")
    return result


async def main():
    blocks = []
    current_number_of_blocks = 0
    text = ""
    lines = get_lines()
    for line in lines:
        text += line
        # A blank line ends one subtitle entry (skip blanks before any content).
        if line.strip() == "" and text.strip():
            current_number_of_blocks += 1
            if current_number_of_blocks >= max_blocks:
                blocks += [get_prompt(language, text)]
                current_number_of_blocks = 0
                text = ""
    if text.strip():
        blocks += [get_prompt(language, text)]

    tasks = [translate(language, index + 1, len(blocks), block) for index, block in enumerate(blocks)]
    result = await asyncio.gather(*tasks)
    with open("output.srt", "w", encoding="utf-8") as file:
        for chunk in result:
            file.write(chunk)


asyncio.run(main())
```

You can also check out the code in this GitHub repository.

Conclusion

With this script, you can translate large subtitle files. Translation quality varies from one language to another, so you may want to try different models, such as GPT-4. Note that the script occasionally produces .srt files with a few missing sequence numbers, which is an area for future improvement.
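One possible fix for the missing sequence numbers is to renumber the output after translation. The sketch below, with the hypothetical helper `renumber_srt`, assumes entries are separated by blank lines and each starts with either a sequence number or, when the number is missing, the timing line. It rewrites the numbers as 1, 2, 3, and so on, and it drops any entry without a timing line, so compare entry counts against the input if that matters to you.

```python
import re

# A timing line looks like "00:02:16,612 --> 00:02:19,376".
TIMING = re.compile(r"\d{2}:\d{2}:\d{2},\d{3} --> \d{2}:\d{2}:\d{2},\d{3}")


def renumber_srt(content: str) -> str:
    """Rewrite sequence numbers as 1, 2, 3, ..., restoring any that are missing."""
    entries = []
    for entry in re.split(r"\n\s*\n", content.replace("\r\n", "\n").strip()):
        lines = entry.splitlines()
        # Drop the existing number line; keep everything from the timing line on.
        if lines and not TIMING.match(lines[0]):
            lines = lines[1:]
        if lines and TIMING.match(lines[0]):
            entries.append(lines)  # entries without a timing line are skipped
    return "\n\n".join(
        f"{i}\n" + "\n".join(lines) for i, lines in enumerate(entries, start=1)
    ) + "\n"
```

You could run it on output.srt after the script finishes and write the result back to the same file.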

Appendix: ChatGPT Pricing

Below is the OpenAI API pricing at the time of writing.

| GPT-4 | Input | Output |
|---|---|---|
| 8K context | $0.03 / 1K tokens | $0.06 / 1K tokens |
| 32K context | $0.06 / 1K tokens | $0.12 / 1K tokens |

| GPT-3.5 Turbo | Input | Output |
|---|---|---|
| 4K context | $0.0015 / 1K tokens | $0.002 / 1K tokens |
| 16K context | $0.003 / 1K tokens | $0.004 / 1K tokens |

If you use GPT-3.5 Turbo with the 4K context, you'll spend roughly $0.0035 for every 1,000 tokens of subtitles: $0.0015 for the 1,000 input tokens plus $0.002 for a translation of about the same length. Now, you might be thinking, "uhhh... what exactly are context, tokens, and all that jazz?" Well, I'm glad you asked, because I had the same question once! :)

- Tokens are common sequences of characters found in text, and GPT models process text as tokens. For example, "It's nice to meet you." is split into 7 tokens, as shown below. You can use this Tokenizer to check the actual number of tokens in your input. As a rough rule of thumb, one token is about 4 characters, or 0.75 words, of English text.

It + 's + nice + to + meet + you + .

- "GPT-3.5" is one of OpenAI's model families. They also offer GPT-4, which, as the name suggests, is more capable, but it is considerably more expensive (20 to 30 times the per-token price of GPT-3.5 Turbo 4K, according to the tables above) and has stricter rate limits. In my experiments, GPT-3.5 Turbo produced good enough quality, so I stuck with it. However, consider GPT-4 if you're not satisfied with the results for your language.

- 4K context means the model can work with about 4,000 tokens (4,096, to be exact) at a time, and this budget covers both the prompt and the response. You can think of it as the model's memory: a larger context lets you send bigger chunks in a single request, at a higher cost per token.
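Putting the pricing and the rule of thumb together, here is a back-of-the-envelope estimator. It is a rough sketch under stated assumptions: about 4 characters per token, the GPT-3.5 Turbo 4K prices from the table above, and no allowance for the prompt template that is repeated with every chunk. Translations into languages such as Korean often take more tokens than the English source, which is why the output-to-input ratio is a parameter. `estimate_cost` is a hypothetical helper.

```python
# USD per 1K tokens for GPT-3.5 Turbo (4K context), from the pricing table above.
INPUT_PRICE_PER_1K = 0.0015
OUTPUT_PRICE_PER_1K = 0.002


def estimate_cost(num_chars, chars_per_token=4.0, output_ratio=1.0):
    """Rough translation cost in USD for a subtitle file of num_chars characters.

    Assumes ~chars_per_token characters per input token, and that the
    translation uses output_ratio times as many tokens as the input.
    Ignores the prompt template sent with every chunk.
    """
    input_tokens = num_chars / chars_per_token
    output_tokens = input_tokens * output_ratio
    return (input_tokens * INPUT_PRICE_PER_1K + output_tokens * OUTPUT_PRICE_PER_1K) / 1000
```

For a 50 KB file (treated as about 50,000 characters), `estimate_cost(50_000)` gives about $0.044, and `estimate_cost(50_000, output_ratio=2.0)` gives about $0.069, both in line with the under-$0.10-per-language figure earlier in this post.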